ABSTRACT

OBJECTIVE: To develop a deep learning system to classify non-small cell lung cancer (NSCLC) by histologic subtype—adenocarcinoma or squamous cell carcinoma (SCC)—from computed tomography (CT) images in which the tumor regions were segmented, comparing our results with those of similar studies conducted in other countries and evaluating the accuracy of automated classification by using data from the Instituto Nacional de Câncer, Brazil.

MATERIALS AND METHODS: To develop the classification system, we employed a 2D U-Net neural network for semantic segmentation, with data augmentation and preprocessing steps. It was pretrained on 28,506 CT images from The Cancer Imaging Archive, a public database, and validated on 2,015 additional images from the same archive. To develop the classification algorithm, we used a VGG16-based network, modified for better performance, with 3,080 images of adenocarcinoma and SCC from the Instituto Nacional de Câncer database.

RESULTS: The algorithm achieved an accuracy of 84.5% for detecting adenocarcinoma and 89.6% for detecting SCC, with sensitivities of 91.7% and 90.4%, respectively, which are considered satisfactory when compared with the values obtained in similar studies.

CONCLUSION: The system developed appears to provide accurate automated detection, as well as tumor segmentation and classification of NSCLC subtypes, in a local population by using deep learning networks trained on public image data sets. This method could assist oncological radiologists by improving the efficiency of preliminary diagnoses.

Keywords:

Carcinoma, non-small-cell lung; Image processing, computer-assisted/methods; Segmentation; Semantics; Carcinoma/classification; Deep learning.

SUMMARY

OBJECTIVE: To develop a system based on deep learning techniques to classify the main histologic subtypes of non-small cell lung cancer (NSCLC), adenocarcinoma and squamous cell carcinoma (SCC), using computed tomography images. The system segments the tumor in the images and classifies the lesions as adenocarcinoma or SCC. We compared the results with those of similar studies and evaluated the accuracy of automated classification of NSCLC subtypes using images provided by the Instituto Nacional de Câncer.

MATERIALS AND METHODS: The network used for semantic segmentation was based on the 2D U-Net architecture, with a preprocessing step and data augmentation techniques. This network was pretrained on 28,506 images and validated on 2,015 images from The Cancer Imaging Archive, an internationally known public repository of imaging data. For classification, a network based on the VGG16 architecture, modified to improve performance, was used with 3,080 images of adenocarcinoma and SCC from the Instituto Nacional de Câncer.

RESULTS: The system detects lesions with an accuracy of 84.5% for adenocarcinoma and 89.6% for SCC, with sensitivities of 91.7% and 90.4%, respectively, which is considered adequate when compared with similar studies.

CONCLUSION: This system offers an accurate method of detection, segmentation, and classification for a small set of patients, which may help radiologists in image analysis and speed up preliminary diagnosis.

Keywords:

Carcinoma, non-small-cell lung; Image processing, computer-assisted; Segmentation; Semantics; Carcinoma/classification; Deep learning.

INTRODUCTION

Early cancer detection influences the disease outcome(1). That motivated the present study, which aims to aid in the classification of the predominant histologic subtypes of lung cancer. Lung cancer is a major public health issue in Brazil. According to the Brazilian Instituto Nacional de Câncer (INCA, National Cancer Institute), a combined 32,560 cases of lung, trachea, and bronchial cancer were expected in 2024(2). Non-small cell lung cancer (NSCLC) is the most common type, accounting for 85% of all lung cancer cases. There are two main subtypes of NSCLC: adenocarcinoma and squamous cell carcinoma (SCC). In 60% of cases, NSCLC is detected at an advanced stage, resulting in a global 5-year survival rate of only 10–15%(3,4).

Biopsy, albeit essential for confirming malignancy, is limited by the small size of the specimens and therefore might not fully capture the heterogeneity of a given tumor. Accurate delineation of the tumor is a crucial first step in its classification(5). Because stable, accurate segmentation is critical, an automated, reproducible lung tumor delineation algorithm would facilitate that classification. In addition, some images cannot be segmented in only one step, and additional steps are therefore required; in many cases, the radiologist must scroll through many computed tomography (CT) slices to determine what part of the segmentation is missing(6). Medical image segmentation generally consists of two related tasks: object recognition and object delineation. Accurate image segmentation methods are vital for proper disease detection, histologic classification of tumors, diagnosis, treatment planning, and follow-up. In addition to the conventional region-of-interest analysis, an accurate image segmentation method is often needed for diagnostic or prognostic assessment(7–10).

Deep learning applications

Deep learning (DL) techniques have been increasingly used in order to address challenges in the detection and classification of tumors, requiring large annotated data sets for effective learning(11). Recent advancements include hybrid regional networks for automated tumor segmentation from positron-emission tomography (PET)/CT images(12), fully automated pipelines for volumetric segmentation of NSCLC(13), and computer-aided diagnostic methods for CT images(14). The World Health Organization emphasizes the importance of staging and histologic classification for treatment and prognosis(15). Networks employing DL could enhance the classification of NSCLC subtypes by leveraging various image data sets, tailored for both public and local populations.

MATERIALS AND METHODS

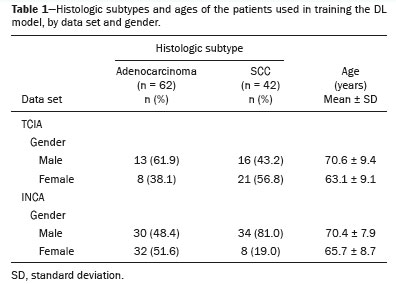

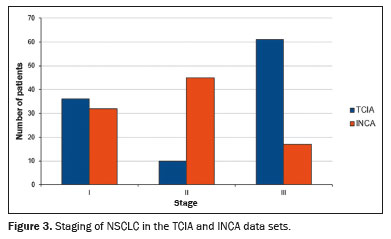

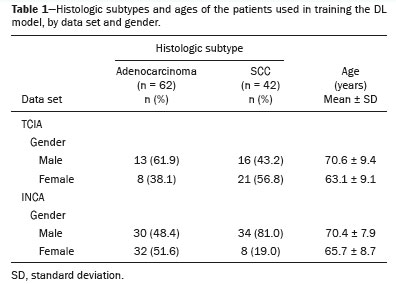

This work was approved by the INCA Board of Ethics (Reference no. 6.331.223; 14/09/2022). We analyzed 2,005 examinations in the INCA database with the predominant histologic subtypes, of which 1,172 (58.5%) were adenocarcinoma and 833 (41.5%) were SCC. The criteria for patient selection were as follows: having a tumor classified as stage I, II, or III; having a tumor for which the largest diameter was ≤ 7 cm; having no metastases; and CT image acquisition and classification of the histologic subtype having occurred within two weeks of each other. From the INCA database, we selected 104 patients to use for the validation (62 with adenocarcinomas and 42 with SCCs), which reflects a ratio between the subtypes similar to that of the sample as a whole. For the classification, we selected 62 INCA patients: 30 with adenocarcinomas and 32 with SCCs. For validation, we used 81 patients registered in The Cancer Imaging Archive (TCIA): 41 with adenocarcinomas and 40 with SCCs. Thus, we maintained the balance of the data sets for each subtype.

The pipeline developed for this research uses two convolutional neural networks (CNNs) written in Python, version 3.9, using the TensorFlow and Keras frameworks for model implementation and the scikit-learn library for model evaluation. The first CNN employs a 2D U-Net architecture(16) that uses convolutional layers to detect local features in input images. Each neuron in a convolutional layer connects to a small subset of spatially adjacent neurons in the preceding layer, and the connection weights are shared, which enhances the detection of local structures. This architecture includes pooling layers to reduce computational complexity and extract hierarchical image features. The second CNN is based on the VGG16 model proposed by Simonyan & Zisserman(17). This model, noted for its high performance on ImageNet(18,19), features a deep network with 13 convolutional layers, five max-pooling layers, and three dense layers. The VGG16 design uses small filters, greater depth, and a filter count that grows after max-pooling layers. Transfer learning is used to adjust the final layers for improved classification of adenocarcinoma and SCC. All tasks were performed on a system with an 8th generation Intel i7 processor, 16 GB of RAM, and an NVIDIA GeForce GTX 1060 graphics processing unit (GPU).
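As a rough illustration of the VGG16 layout described above (13 convolutional layers, five max-pooling layers, three dense layers), the following plain-Python sketch tallies the layers and trainable parameters. The filter counts, the 224 × 224 × 3 input, and the 1,000-class output follow the original ImageNet configuration of Simonyan & Zisserman. The two-class head is only an assumed stand-in for an adenocarcinoma/SCC classifier, because the text does not specify how the final layers were modified.

```python
# Layout of the original VGG16: integers are the number of 3x3 filters in a
# convolutional layer, "M" marks a 2x2 max-pooling layer.
VGG16_CONV_LAYOUT = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
                     512, 512, 512, "M", 512, 512, 512, "M"]


def conv_base_summary(layout, in_channels=3, input_size=224):
    """Return (n_conv, n_pool, n_params, out_size, out_channels)."""
    n_conv = n_pool = n_params = 0
    size, channels = input_size, in_channels
    for item in layout:
        if item == "M":
            n_pool += 1
            size //= 2  # each pooling layer halves height and width
        else:
            n_conv += 1
            n_params += 3 * 3 * channels * item + item  # weights + biases
            channels = item
    return n_conv, n_pool, n_params, size, channels


def dense_params(n_inputs, widths):
    """Parameters of a stack of fully connected layers with the given widths."""
    total = 0
    for width in widths:
        total += n_inputs * width + width  # weights + biases
        n_inputs = width
    return total


n_conv, n_pool, conv_params, size, channels = conv_base_summary(VGG16_CONV_LAYOUT)
flat = size * size * channels  # 7 * 7 * 512 features entering the dense layers

# Original ImageNet head: two 4096-unit layers and a 1000-class output.
imagenet_total = conv_params + dense_params(flat, [4096, 4096, 1000])
# Hypothetical two-class head (an assumption, not the authors' design).
two_class_total = conv_params + dense_params(flat, [4096, 4096, 2])

print(n_conv, n_pool, conv_params, imagenet_total, two_class_total)
```

Almost all of the parameters sit in the dense layers, which is one reason transfer learning typically retrains only the final layers while reusing the convolutional base.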

Data set

For the purposes of this study, we employed TCIA(20), a public database that, at the time of our access, contained 442 scans of patients with NSCLC, including clinical annotations for radiomics. This database also provides the ground truth segmentation images of the NSCLC tumors, defined by a specialist board, in which the tumor label has the same shape as the corresponding CT image. For training, 122 scans were selected from the TCIA data set and categorized as adenocarcinoma, SCC, or “not otherwise specified”. For external validation, we used 104 PET/CT scans from INCA patients diagnosed with NSCLC between 2016 and 2020. These scans averaged 600 slices each and were acquired on a Philips Gemini TF PET/CT scanner with standardized scanning protocols: a tube voltage of 120 kVp, a tube current of 213 mAs, mediastinal window settings, and a slice thickness of 1 mm, without contrast.

Preprocessing

The preprocessing steps aimed to optimize the data for training(21) and to conform to GPU memory limitations. Original images (512 × 512 pixels) were resized to 256 × 256 pixels, which reduced memory use while retaining sufficient spatial resolution. The preprocessing consisted of isolating the lung regions by applying a threshold followed by morphological operations (erosion and dilation). The resulting lung-only mask was then applied to the original image, and the masked image was used as the network input. This procedure was also used on the INCA image data set during the validation.
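The masking step can be sketched in plain Python as follows. This is a minimal illustration on a toy 7 × 7 image, not the authors' implementation: the helper names (`threshold`, `erode`, `dilate`, `apply_mask`), the 3 × 3 neighbourhood, the −400 threshold, and the pixel values are all assumptions, because the text gives no parameters. A real lung mask would also have to exclude the air outside the body.

```python
def threshold(image, level):
    """Binary mask: 1 where the pixel value is below `level` (air-like), else 0."""
    return [[1 if px < level else 0 for px in row] for row in image]


def _morph(mask, keep):
    """Apply a 3x3 neighbourhood rule; neighbours outside the image count as 0."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            window = [mask[rr][cc] if 0 <= rr < h and 0 <= cc < w else 0
                      for rr in (r - 1, r, r + 1) for cc in (c - 1, c, c + 1)]
            out[r][c] = 1 if keep(window) else 0
    return out


def erode(mask):
    return _morph(mask, all)  # pixel survives only if all 9 neighbours are set


def dilate(mask):
    return _morph(mask, any)  # pixel is set if any of the 9 neighbours is set


def apply_mask(image, mask, fill):
    """Keep pixels inside the mask; replace everything else with `fill`."""
    return [[px if m else fill for px, m in zip(irow, mrow)]
            for irow, mrow in zip(image, mask)]


SOFT_TISSUE, LUNG = 40, -800           # illustrative HU-like values
image = [[SOFT_TISSUE] * 7 for _ in range(7)]
for r in range(1, 5):
    for c in range(1, 5):
        image[r][c] = LUNG             # 4x4 lung-like region
image[6][6] = LUNG                     # isolated dark speck (noise)

raw_mask = threshold(image, level=-400)    # assumed threshold
lung_mask = dilate(erode(raw_mask))        # erosion then dilation removes the speck
masked = apply_mask(image, lung_mask, fill=0)

print(sum(map(sum, raw_mask)), sum(map(sum, lung_mask)))
```

Erosion deletes the isolated speck and shrinks the large region; the subsequent dilation restores the large region to its original extent, so only the lung-like block remains in the mask.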

Data augmentation

The TCIA data set initially contained 10,025 images, of which only 1,057 contained NSCLC information. Geometric transformations were applied to address this imbalance and enhance training. Horizontal mirroring increased the data set to 20,050 samples. Additional augmentations(22), including rotations (10° and −10°) and elastic deformations (random displacement fields), further expanded the data set to 28,506 images. This same data augmentation sequence was used on the INCA database images only in the training stage of the classification network.
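The arithmetic behind these totals can be checked with a short sketch. Horizontal mirroring is shown on a toy image. The step from 20,050 to 28,506 images is then reproduced under one assumption that the text does not state: that each of the 2,114 tumor-containing slices (original plus mirrored) received four further variants, such as the two rotations and two elastic deformations. That split is an inference that happens to match the reported total, not a documented procedure.

```python
def mirror_horizontal(image):
    """Flip a 2D image (a list of rows) left to right."""
    return [row[::-1] for row in image]


# Toy example of the mirroring transform.
toy = [[1, 2, 3],
       [4, 5, 6]]
print(mirror_horizontal(toy))

# Counts reported in the text.
original_images = 10_025
tumor_images = 1_057

# Mirroring every image doubles the data set.
after_mirroring = 2 * original_images
tumor_after_mirroring = 2 * tumor_images

# ASSUMPTION (not stated in the source): four extra variants per tumor slice,
# e.g. rotation by +10 degrees, rotation by -10 degrees, two elastic deformations.
extra_variants_per_tumor_slice = 4
total = after_mirroring + extra_variants_per_tumor_slice * tumor_after_mirroring

print(after_mirroring, total)
```

Augmenting only the tumor-containing slices raises their share of the training set, which is consistent with the stated goal of addressing the imbalance.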

Metrics

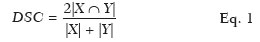

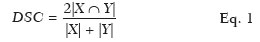

To evaluate the results from the DL algorithm, we calculated several metrics. For the validation testing of the segmentation results using the TCIA database, we calculated the Dice similarity coefficient (DSC), a statistical index that evaluates the similarity between two sets of data and has become one of the most widely used tools in the validation of image segmentation algorithms(20), as follows:

$$\mathrm{DSC} = \frac{2\,|X \cap Y|}{|X| + |Y|} \qquad (1)$$

where |X| is the area of the ground truth segmentation, |Y| is the area of the semantic segmentation result, and |X ∩ Y| is the area of overlap (intersection) between X and Y.
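A minimal sketch of this metric on binary masks is given below. It computes DSC = 2|X ∩ Y| / (|X| + |Y|) from the definitions above. The function name `dice_coefficient`, the toy masks, and the convention of returning 1.0 when both masks are empty are illustrative assumptions.

```python
def dice_coefficient(truth, prediction):
    """Dice similarity coefficient between two binary masks (lists of 0/1 rows)."""
    area_truth = sum(map(sum, truth))            # |X|
    area_pred = sum(map(sum, prediction))        # |Y|
    overlap = sum(t * p                          # |X intersect Y|
                  for trow, prow in zip(truth, prediction)
                  for t, p in zip(trow, prow))
    if area_truth + area_pred == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2 * overlap / (area_truth + area_pred)


truth = [[0, 1, 1, 0],
         [0, 1, 1, 0]]
prediction = [[0, 0, 1, 1],
              [0, 0, 1, 1]]

print(dice_coefficient(truth, prediction))  # 2 * 2 / (4 + 4) = 0.5
```

The coefficient ranges from 0 (no overlap) to 1 (identical masks).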

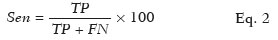

To evaluate the performance of the algorithm in the detection and classification of NSCLC tumors, we calculated the sensitivity, specificity, and accuracy as shown in Eqs. 2, 3, and 4, respectively:

$$\mathrm{Sen} = \frac{TP}{TP + FN} \qquad (2)$$

where Sen is the sensitivity, TP is the number of true-positive results (i.e., segmented slices in which a nodule was detected and classified as adenocarcinoma or SCC, subsequently being classified as adenocarcinoma or SCC on histology), and FN is the number of false-negative results (i.e., segmented slices in which a nodule was detected and classified as noncancerous, subsequently being classified as adenocarcinoma or SCC on histology).

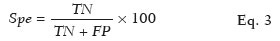

$$\mathrm{Spe} = \frac{TN}{TN + FP} \qquad (3)$$

where Spe is the specificity, TN is the number of true-negative results (i.e., segmented slices in which a nodule was detected and classified as noncancerous, subsequently being classified as noncancerous on histology), and FP is the number of false-positive results (i.e., segmented slices in which a nodule was detected and classified as cancerous, subsequently being classified as noncancerous on histology).

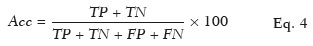

$$\mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (4)$$

where Acc is the accuracy, TP + TN is the total number of slices that were classified correctly, and TP + TN + FP + FN is the overall total number of slices evaluated.
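The three slice-level metrics can be sketched as follows, using the standard definitions Sen = TP / (TP + FN), Spe = TN / (TN + FP), and Acc = (TP + TN) / (TP + TN + FP + FN). The function names and the toy label vectors are illustrative assumptions, with 1 denoting a cancerous slice and 0 a noncancerous slice.

```python
def confusion_counts(reference, predicted):
    """Return (TP, TN, FP, FN) for binary labels (1 = cancerous, 0 = noncancerous)."""
    tp = sum(1 for r, p in zip(reference, predicted) if r == 1 and p == 1)
    tn = sum(1 for r, p in zip(reference, predicted) if r == 0 and p == 0)
    fp = sum(1 for r, p in zip(reference, predicted) if r == 0 and p == 1)
    fn = sum(1 for r, p in zip(reference, predicted) if r == 1 and p == 0)
    return tp, tn, fp, fn


def sensitivity(tp, fn):
    return tp / (tp + fn)


def specificity(tn, fp):
    return tn / (tn + fp)


def accuracy(tp, tn, fp, fn):
    return (tp + tn) / (tp + tn + fp + fn)


# Toy example: eight slices, three of them cancerous on the reference standard.
reference = [1, 1, 1, 0, 0, 0, 0, 0]
predicted = [1, 1, 0, 0, 0, 0, 0, 1]

tp, tn, fp, fn = confusion_counts(reference, predicted)
print(tp, tn, fp, fn)
print(sensitivity(tp, fn), specificity(tn, fp), accuracy(tp, tn, fp, fn))
```

Because most slices contain no tumor, specificity can be very high even when accuracy on the subtype task is lower, so the three values are best read together.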

Training

The use of a GTX 1060 GPU accelerated the training process approximately 16-fold in comparison with training without a GPU. Two learning rates (10⁻³ and 10⁻⁶) were tested, and the best efficiency was achieved at the 10⁻³ rate. The adaptive moment estimation (Adam) optimizer, an adaptive learning rate optimization algorithm designed specifically for training deep neural networks(23), was used to minimize the cost function (cross-entropy) and maximize the DSC; it was chosen because it is considered to converge faster. The training runtime was about 12 h for batches of 8 or 18 images.
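For readers unfamiliar with adaptive moment estimation (commonly abbreviated Adam), the sketch below shows the standard update rule applied to a one-parameter toy problem, minimizing θ². It uses the 10⁻³ learning rate reported above; the decay rates (0.9 and 0.999) and the small constant ε are commonly used defaults assumed here, because the text does not report them. It is not the training code used in the study.

```python
import math


def adam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter; returns (theta, m, v)."""
    m = beta1 * m + (1 - beta1) * grad           # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad    # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                 # bias correction (t starts at 1)
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


# Toy objective f(theta) = theta^2, whose gradient is 2 * theta.
theta, m, v = 1.0, 0.0, 0.0
history = [theta]
for t in range(1, 201):
    grad = 2 * theta
    theta, m, v = adam_step(theta, grad, m, v, t, lr=1e-3)
    history.append(theta)

print(history[1], history[-1])
```

On the first step the bias-corrected ratio has magnitude close to 1, so the parameter moves by roughly the learning rate regardless of the gradient scale; this normalization is what makes the method adaptive.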

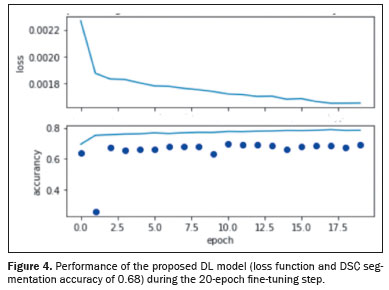

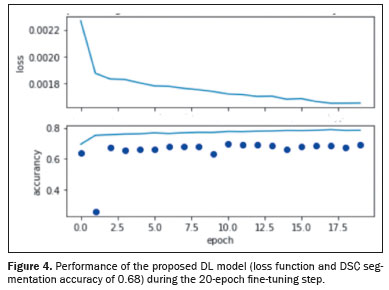

Initially, the 2D U-Net was trained from scratch for 40 epochs on 28,506 images from the TCIA NSCLC database (the pretraining step). After this initial learning step, a fine-tuning transfer learning process was applied to the 2D U-Net to improve the DSC performance. This fine-tuning step used an additional data set of 497 tumor-only images from 22 other patients in the TCIA NSCLC database and ran for an additional 20 epochs. We consider this adequate for detecting images with tumors without impairing the detection of true negatives, which account for the majority (77%) of the images.

Segmentation

Semantic segmentation consists of separating an image into different regions and allocating each pixel to the part of the image to which it belongs. The NSCLC cases were detected by using the U-Net architecture. An additional 2,015 images containing NSCLC ground truth segmentation (17 different patient cases with NSCLC adenocarcinoma and SCC subtypes) were selected from the TCIA data set to validate the segmentation results.

Figure 1 shows the steps in the semantic segmentation process, from the initial learning to the validation test: the 2D U-Net pretraining on the 28,506 images from the TCIA database; the initial validation test with segmentation metrics (DSC) related to the 2,015 images from the TCIA database; the fine-tuning step with 497 tumor-only images from the TCIA database to improve the segmentation performance of the 2D U-Net; and the final NSCLC validation test with 85,678 images from 104 INCA patients. The results were compared with the annotations available on patient electronic medical records to evaluate sensitivity.

Detection

The NSCLC cases were detected by comparing the binary image outputs with the annotations available on patient electronic medical records to evaluate sensitivity.

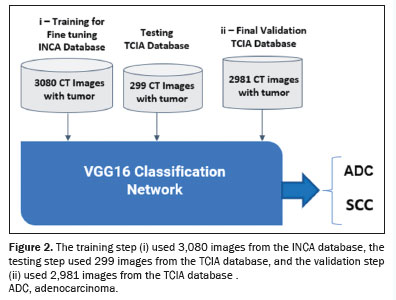

Classification

To classify the NSCLC histologic subtype, we used the VGG16 architecture, with performance metrics including accuracy and the area under a receiver operating characteristic curve. Three learning rates (10⁻⁷, 10⁻⁸, and 10⁻⁹) were tested, and the best accuracy was achieved with the 10⁻⁸ rate. The process involved the following: fine-tuning training with 3,080 images from the INCA database and testing with 299 images from the TCIA database; validation using 2,981 images from the TCIA database; image preprocessing including centering, resizing to 112 × 112 pixels, and conversion to the red-green-blue color space; and data augmentation similar to what was used in the previous steps. Figure 2 illustrates the fine-tuning training with 3,080 images from the INCA database and the final validation with 2,891 images from the TCIA database.
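The area under a receiver operating characteristic curve mentioned above can be computed directly from classifier scores. The sketch below uses the pairwise (rank-based) definition: the fraction of positive/negative pairs in which the positive case receives the higher score, with ties counted as one half. The function name `roc_auc`, the toy scores, and the choice of which subtype is coded as the positive class are illustrative assumptions.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve from binary labels (0/1) and classifier scores."""
    positives = [s for l, s in zip(labels, scores) if l == 1]
    negatives = [s for l, s in zip(labels, scores) if l == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present to compute the AUC")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive case ranked above negative case
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(positives) * len(negatives))


# Toy example with four images: label 1 is one subtype, label 0 the other.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]

print(roc_auc(labels, scores))  # 3 of 4 pairs correctly ordered: 0.75
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, which makes it a useful complement to accuracy at a single decision threshold.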

RESULTS

Table 1 shows TCIA and INCA epidemiological information from patients with each of the predominant histologic subtypes used to train the segmentation and detection DL algorithms. The pretraining step used 40 epochs, and the training process as a whole used 60 accumulated epochs.

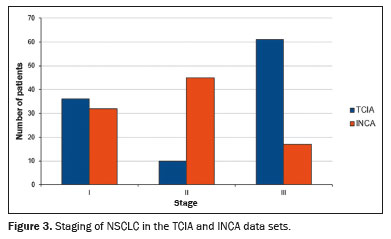

Figure 3 shows relevant clinical factors: the NSCLC patient staging for the early stage (stage I and stage II) and advanced stage (stage III) data sets. Comparing the INCA and TCIA data sets, we found that the number of patients was similar for stage I but there were more INCA patients in stage II and more TCIA patients in stage III. The INCA data set had more patients in the early stages, whereas the distribution of patients was more homogeneous across the three stages in the TCIA data set. Figure 4 shows the performance of the DL model (loss function and DSC, which reached 0.68) on the TCIA data set during the fine-tuning step, for the training and test runs of the initial detection and segmentation process.

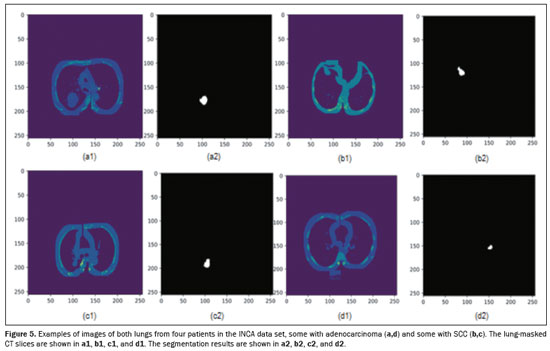

Figure 5 shows the semantic segmentation results for four tumors, two classified as adenocarcinoma and two classified as SCC, located in both lungs; these results were applied to the preprocessed images to form the input for the DL histologic subtype classification step. Prediction for all 600 slices of a patient's CT scan took approximately 6 s.

For the test/validation image data set, the proposed DL model showed greater than 90% sensitivity and specificity for detecting both main histologic subtypes of NSCLC. After the fine-tuning step, in INCA patients, the model had sensitivities of 91.7% and 90.4% for adenocarcinoma and SCC, respectively, with specificities of 99.3% and 99.5% and accuracies of 84.5% and 89.6%. The number of patients whose data were used to train the proposed DL model was balanced with the incidence rate by histologic subtype (adenocarcinoma and SCC) and, as indicated above, the accuracy was approximately 5 percentage points higher for classifying SCC.

DISCUSSION

During the training of the DL model proposed in our study, a DSC of 0.68 was achieved, compared with the 0.84 reported by Primakov et al.(13), who did not differentiate among NSCLC subtypes. After fine-tuning, our model achieved a 99% specificity in tumor detection, which aligns with the results obtained by Lei et al.(12) and indicates effective differentiation between images of tumors and non-tumors, with minimal false-positive results.

The TCIA data set used for training our model included 21 cases of adenocarcinoma, 37 cases of SCC, and 58 cases of other NSCLC subtypes, whereas the INCA data set used for external validation included 62 cases of adenocarcinoma and 42 cases of SCC. The predominance of SCC over adenocarcinoma in the TCIA data set constitutes a significant mismatch, given that adenocarcinoma is more prevalent in the population of Brazil(2,30). To address this imbalance, the model should be fine-tuned with a more balanced data set, ideally with twice as many cases of adenocarcinoma as cases of SCC. Such adjustments are crucial for increasing accuracy and addressing population-specific biases(25,26).

The present study utilized adequate preprocessing with a lung mask, allowing satisfactory results to be obtained within 60 epochs, far fewer than the 100–500 epochs required in similar studies(24). That level of efficiency was due to improved preprocessing and training techniques. The proposed method also demonstrated good agreement with physician reports on electronic medical records for INCA patients, in keeping with the results obtained by Lei et al.(12) and Paing et al.(14).

For identifying adenocarcinoma and SCC, our detection algorithm achieved an accuracy comparable to that reported by Aydın et al.(27), with a sensitivity similar to that reported by Primakov et al.(13). The results of the present study, distinguishing between the main histologic subtypes, align well with the findings of nondichotomous classification studies such as those conducted by Chaunzwa et al.(28) and Pang et al.(29). After testing various image sizes, we chose 224 × 224 pixels, which is suitable for the VGG16 network input, the same input as the original network architecture.

Overall, increasing accuracy in classifying NSCLC histology subtypes remains challenging, particularly for multiple subtypes, due to the need for larger and more diverse data sets. Classification accuracy tends to improve with a larger number of cases for each subtype in the data set.

Our study has some limitations. First, the proposed DL model was not trained to make an initial diagnosis of cancer, instead being trained and tested on patients already diagnosed with neoplasia, with the aim of differentiating between histologic subtypes. In addition, the model was tested on data from a single scanner. Although that might be considered a limitation, it highlights the feasibility of applying DL for histologic differentiation in this controlled setting.

Upregulated expression of programmed death-ligand 1 protein is important for some immunotherapies. Therefore, future studies could improve upon our research considerably by incorporating genomics.

We believe that our findings could facilitate the development of advanced diagnostic tools to improve health care, mainly in regions with limited access to medical specialists. Another area in which our model could be useful is in the diagnosis of lung cancer due to electronic cigarette use, the incidence of which has been increasing, particularly among young people.

CONCLUSION

This work offers a way to classify the main histologic subtypes of NSCLC in a specific population, with a DSC similar to or better than that obtained in previous studies of this topic. This approach could be useful in the automated classification of lung cancer subtypes in local populations, using DL networks trained on public image data sets. The use of the DL model proposed here could also help oncological radiologists in image analysis and processing.

REFERENCES

1. World Health Organization. Cancer. [cited 2024 Jul 26]. Available from: https://www.who.int/news-room/fact-sheets/detail/cancer.

2. Ministério da Saúde. Instituto Nacional de Câncer. Estimativa 2023: incidência de câncer no Brasil. [cited 2024 Jul 26]. Available from: https://www.inca.gov.br/publicacoes/livros/estimativa-2023-incidencia-de-cancer-no-brasil.

3. American Cancer Society. Cancer Facts & Figures 2022. Atlanta, GA: American Cancer Society; 2022.

4. Lima KYN, Cancela MC, Souza DLB. Spatial assessment of advanced-stage diagnosis and lung cancer mortality in Brazil. PLoS One. 2022;17:e0265321.

5. Rajkomar A, Dean J, Kohane I. Machine learning in medicine. N Engl J Med. 2019;380:1347–58.

6. Gu Y, Kumar V, Hall LO, et al. Automated delineation of lung tumors from CT images using a single click ensemble segmentation approach. Pattern Recognit. 2013;46:692–702.

7. Koenigkam-Santos M, Ferreira Júnior JR, Wada DT, et al. Artificial intelligence, machine learning, computer-aided diagnosis, and radiomics: advances in imaging towards precision medicine. Radiol Bras. 2019;52:387–96.

8. Dunn B, Pierobon M, Wei Q. Automated classification of lung cancer subtypes using deep learning and CT-scan-based radiomic analysis. Bioengineering (Basel). 2023;10:690.

9. Pasini G, Stefano A, Russo G, et al. Phenotyping the histopathological subtypes of non-small-cell lung carcinoma: how beneficial is radiomics? Diagnostics (Basel). 2023;13:1167.

10. Han Y, Ma Y, Wu Z, et al. Histologic subtype classification of non-small cell lung cancer using PET/CT images. Eur J Nucl Med Mol Imaging. 2021;48:350–60.

11. Tian P, Wang Y, Li L, et al. CT-guided transthoracic core needle biopsy for small pulmonary lesions: diagnostic performance and adequacy for molecular testing. J Thorac Dis. 2017;9:333–43.

12. Lei Y, Wang T, Jeong JJ, et al. Automated lung tumor delineation on positron emission tomography/computed tomography via a hybrid regional network. Med Phys. 2023;50:274–83.

13. Primakov SP, Ibrahim A, van Timmeren JE, et al. Automated detection and segmentation of non-small cell lung cancer computed tomography images. Nat Commun. 2022;13:3423.

14. Paing MP, Hamamoto K, Tungjitkusolmun S, et al. Automatic detection and staging of lung tumors using locational features and double-staged classifications. Appl Sci. 2019;9:2329.

15. Nicholson AG, Tsao MS, Beasley MB, et al. The 2021 WHO classification of lung tumors: impact of advances since 2015. J Thorac Oncol. 2022;17:362–87.

16. Ronneberger O, Fischer P, Brox T. U-Net: convolutional networks for biomedical image segmentation. [cited 2024 Sep 4]. Available from: https://doi.org/10.1007/978-3-319-24574-4_28.

17. Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. [cited 2024 Sep 4]. Available from: https://doi.org/10.48550/arXiv.1409.1556.

18. Deng J, Dong W, Socher R, et al. ImageNet: a large-scale hierarchical image database. In: Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition; 2009. p. 248–55.

19. Li L, Liu Z, Huang H, et al. Evaluating the performance of a deep learning-based computer-aided diagnosis (DL-CAD) system for detecting and characterizing lung nodules: comparison with the performance of double reading by radiologists. Thorac Cancer. 2019;10:183–92.

20. Clark K, Vendt B, Smith K, et al. The Cancer Imaging Archive (TCIA): maintaining and operating a public information repository. J Digit Imaging. 2013;26:1045–57.

21. Hashimoto F, Kakimoto A, Ota N, et al. Automated segmentation of 2D low-dose CT images of the psoas-major muscle using deep convolutional neural networks. Radiol Phys Technol. 2019;12:210–5.

22. Nemoto T, Futakami N, Kunieda E, et al. Effects of sample size and data augmentation on U-Net-based automatic segmentation of various organs. Radiol Phys Technol. 2021;14:318–27.

23. Anthimopoulos M, Christodoulidis S, Ebner L, et al. Lung pattern classification for interstitial lung diseases using a deep convolutional neural network. IEEE Trans Med Imaging. 2016;35:1207–16.

24. Isensee F, Jaeger PF, Kohl SAA, et al. nnU-Net: a self-configuring method for deep learning-based biomedical image segmentation. Nat Methods. 2021;18:203–11.

25. Gao Y, Song F, Zhang P, et al. Improving the subtype classification of non-small cell lung cancer by elastic deformation based machine learning. J Digit Imaging. 2021;34:605–17.

26. Kuruvilla J, Gunavathi K. Lung cancer classification using neural networks for CT images. Comput Methods Programs Biomed. 2014;113:202–9.

27. Aydın N, Çelik Ö, Aslan AF, et al. Detection of lung cancer on computed tomography using artificial intelligence applications developed by deep learning methods and the contribution of deep learning to the classification of lung carcinoma. Curr Med Imaging. 2021;17:1137–41.

28. Chaunzwa TL, Hosny A, Xu Y, et al. Deep learning classification of lung cancer histology using CT images. Sci Rep. 2021;11:5471.

29. Pang S, Meng F, Wang X, et al. VGG16-T: a novel deep convolutional neural network with boosting to identify pathological type of lung cancer in early stage by CT images. Int J Comput Intell Syst. 2020;13:771–80.

30. Oliveira FRA, Santos AO, Lima MCL, et al. The ratio between the whole-body and primary tumor burden, measured on 18F-FDG PET/CT studies, as a prognostic indicator in advanced non-small cell lung cancer. Radiol Bras. 2021;54:289–94.

1. Instituto Alberto Luiz Coimbra de Pós-Graduação e Pesquisa de Engenharia – Universidade Federal do Rio de Janeiro (COPPE-UFRJ), Rio de Janeiro, RJ, Brazil

2. Instituto Nacional de Câncer (INCA), Rio de Janeiro, RJ, Brazil

a. https://orcid.org/0009-0006-3696-2764

b. https://orcid.org/0009-0002-7947-9116

c. https://orcid.org/0000-0003-3418-9676

d. https://orcid.org/0000-0001-8114-7255

Correspondence: Marcos Antonio Dias Lima

Instituto Nacional de Câncer

Praça da Cruz Vermelha, 25, Centro

Rio de Janeiro, RJ, Brazil, 20230-130

Email: mdlima44@gmail.com

Received August 19, 2024.

Accepted February 10, 2025.

Published May 14, 2025.
