Radiologia Brasileira - Official Scientific Publication of the Colégio Brasileiro de Radiologia

AMB - Associação Médica Brasileira CNA - Comissão Nacional de Acreditação

Vol. 53 nº 1 - Jan. /Feb. of 2020


ORIGINAL ARTICLE

Breast elastography: diagnostic performance of computer-aided diagnosis software and interobserver agreement

Authors: Eduardo F. C. Fleury (1, a); Karem Marcomini (2, b)

Keywords: Ultrasonography; Elasticity imaging techniques; Breast; Diagnosis, computer-assisted; Observer variation.

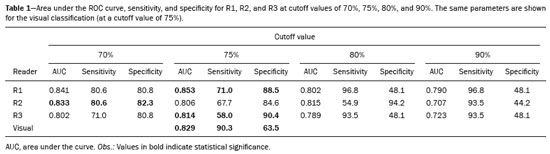

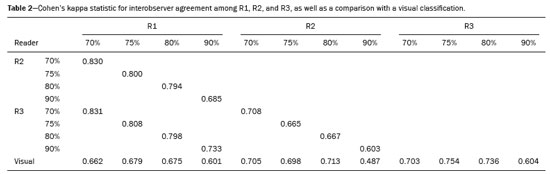

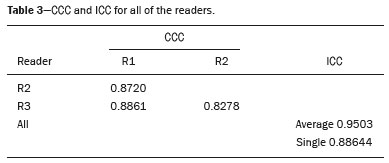

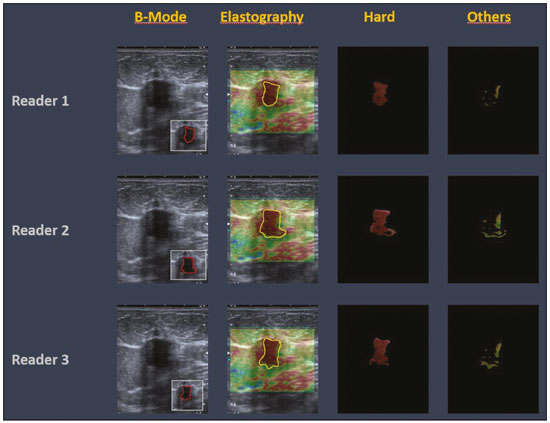

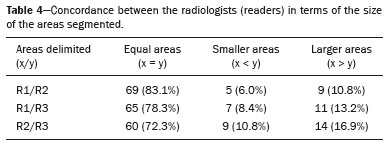

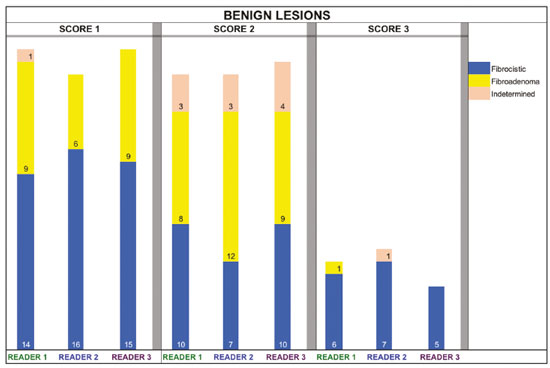

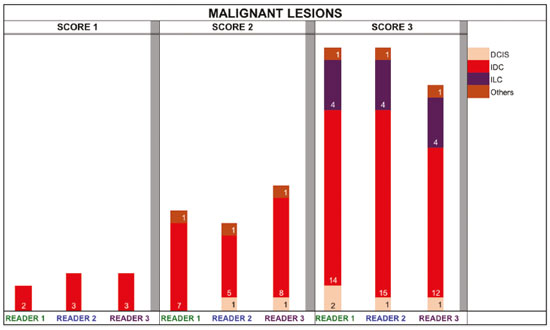

INTRODUCTION

The use of artificial intelligence as an auxiliary tool for classifying breast masses detected by imaging methods is currently under discussion. Technologies such as deep learning and machine learning are being tested with the aim of improving interobserver agreement and diagnostic accuracy(1-4). For strain elastography, the main limitations reported are the lack of standardization of the technique and, most importantly, the lack of defined criteria for the final classification of lesions. In addition, poor interobserver agreement could be a major limitation(5,6). Some authors have reported that a dedicated strain elastography computer-assisted diagnosis (SE-CAD) system is able to classify masses by means of color stratification(7,8).

According to the lexicon in the 5th edition of the Breast Imaging Reporting and Data System (BI-RADS), masses can be classified by elastography as soft, intermediate, or hard, although there is no clear standardization of that classification(9). As proposed in the BI-RADS lexicon, hard lesions are typically associated with malignancy, whereas soft and intermediate lesions should be considered benign. Malignant lesions tend to be hard because malignancy is most often associated with high cellularity and intense peritumoral desmoplasia. Therefore, hard masses are considered positive for malignancy, whereas intermediate and soft masses are considered negative. Determining the best cutoff value is essential for classifying masses, because that value will be the standard for software operation and should provide the best diagnostic accuracy. The aim of this study was to determine the cutoff value for a dedicated SE-CAD system that allows the best classification of masses according to the BI-RADS lexicon.

MATERIALS AND METHODS

We evaluated 83 consecutive breast masses in 83 patients referred for percutaneous breast biopsy between March and May of 2016.
On the basis of the histological analysis, 31 of those masses were categorized as malignant and 52 were categorized as benign. The study was approved by the local research ethics committee (Protocol no. 012664/2016), and all patients gave written informed consent. Between January and March of 2017, we evaluated newly released software, testing several cutoff values for classifying the masses.

Three radiologists, all with experience in elastography, participated in the study: one with 15 years of experience, designated reader 1 (R1); one with 2 years of experience, designated R2; and one with 8 years of experience, designated R3. All images were acquired by R2 with a Toshiba ultrasound system (Aplio 300; Toshiba, Tokyo, Japan) and were analyzed with commercially available software for strain elastography. After B-mode scans had been acquired, elastography was performed as previously described(10,11). In brief, the elastography image was obtained from the first compression-decompression cycle, during which lesion stiffness can be determined on the basis of the cumulative energy. The native ultrasound elastography software has image quality criteria that allow the best image to be selected. The images obtained were imported into a picture archiving and communication system. On the basis of the elastography images, the radiologists gave each lesion a score of 1 (soft), 2 (intermediate), or 3 (hard). All patients underwent percutaneous biopsy, and the results were used as the gold standard for comparative purposes.

The three radiologists, all of whom were blinded to the elastography and histological results, evaluated the images. An SE-CAD system was used for classification of the masses. Each radiologist manually segmented the mass in the B-mode image. The software automatically transfers the selected area to the elastography image, where it is stratified by stiffness (Figure 1).

Figure 1. Example of image stratification by reader, showing the area segmented by each radiologist. In B-mode images, the lesion was manually segmented by the readers (small frame). This information was automatically transferred to the elastography image. The software extracts and separates the hard and soft components of the lesion. The red outline indicates the area segmented by the radiologist. Note that the most experienced radiologist (reader 1) segmented a smaller area than did a less experienced radiologist (reader 3). The outlined area is automatically transferred to the elastography image without any intervention by the radiologist. The hard area is separated from the remaining areas.

SE-CAD color stratification

Elastography images were converted from the red-green-blue (RGB) color space to the Commission Internationale de l'Éclairage L*a*b* (CIELab) color space (where L* is the lightness from black to white, a* is the range from green to red, and b* is the range from blue to yellow), in order to extract the corresponding hard areas, as described in previous studies(7,8). To standardize the stratification, we adopted red to indicate hard lesions and blue to indicate soft lesions, a strategy we believe to be the most intuitive. After the images had been converted from the RGB to the CIELab color space, the Otsu method was applied in the a* channel to define and quantify hard areas(8). Masses were classified according to the proportion of hard area within the mass, with Z denoting the cutoff value; the values of Z tested were 70%, 75%, 80%, and 90%(12). In the final analyses, the masses were classified as soft (< 50% hard area), intermediate (50% to Z% hard area), or hard (> Z% hard area). From the area segmented by the radiologist, the SE-CAD system classified the masses automatically.
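The color stratification and classification steps described above can be illustrated with a short sketch. It is written in pure Python and rests on several assumptions that the text does not state: pixels are 8-bit sRGB triples, the CIELab conversion uses the standard sRGB matrix and a D65 white point, Otsu's threshold is computed over all the pixels passed in (for example, the whole elastogram box), pixels whose a* lies above the threshold count as hard, and a mass with exactly Z% hard area is scored as intermediate. All function names are hypothetical; this is not the authors' SE-CAD software.

```python
def _linearize(c: int) -> float:
    """Undo sRGB gamma: map an 8-bit channel value to linear light in [0, 1]."""
    v = c / 255.0
    return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4


def _lab_f(t: float) -> float:
    """CIELab nonlinearity f(t)."""
    delta = 6 / 29
    return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29


def srgb_to_a_star(r: int, g: int, b: int) -> float:
    """CIELab a* (green-to-red axis) of one sRGB pixel, D65 reference white."""
    rl, gl, bl = _linearize(r), _linearize(g), _linearize(b)
    # a* only needs the X and Y tristimulus values.
    x = 0.4124 * rl + 0.3576 * gl + 0.1805 * bl
    y = 0.2126 * rl + 0.7152 * gl + 0.0722 * bl
    return 500.0 * (_lab_f(x / 0.95047) - _lab_f(y / 1.0))


def otsu_threshold(values: list[float]) -> float:
    """Otsu's method: the split maximizing between-class variance w0*w1*(mu0-mu1)^2."""
    vals = sorted(values)
    n, total = len(vals), sum(vals)
    best_t, best_var, cum = vals[0], -1.0, 0.0
    for i in range(n - 1):
        cum += vals[i]
        if vals[i] == vals[i + 1]:
            continue  # only split between distinct values
        w0 = (i + 1) / n
        mu0 = cum / (i + 1)
        mu1 = (total - cum) / (n - i - 1)
        var = w0 * (1 - w0) * (mu0 - mu1) ** 2
        if var > best_var:
            best_t, best_var = (vals[i] + vals[i + 1]) / 2, var
    return best_t


def se_cad_score(hard_pct: float, z: float = 75.0) -> int:
    """1 = soft (< 50%), 2 = intermediate (50% to Z%), 3 = hard (> Z%)."""
    if hard_pct < 50.0:
        return 1
    return 2 if hard_pct <= z else 3


def classify_mass(pixels, mask, z: float = 75.0) -> tuple[float, int]:
    """Return (percentage of hard area inside the mask, SE-CAD score).

    pixels: flat list of (r, g, b) tuples; mask: flat list of booleans
    marking the radiologist-segmented mass.
    """
    a_star = [srgb_to_a_star(*p) for p in pixels]
    t = otsu_threshold(a_star)
    inside = [a for a, m in zip(a_star, mask) if m]
    hard_pct = 100.0 * sum(a > t for a in inside) / len(inside)
    return hard_pct, se_cad_score(hard_pct, z)


# Toy example: 7 red (hard) and 1 green pixel inside the mask, 2 green outside.
pixels = [(255, 0, 0)] * 7 + [(0, 255, 0)] * 3
mask = [True] * 8 + [False] * 2
print(classify_mass(pixels, mask, z=75.0))  # (87.5, 3)
print(classify_mass(pixels, mask, z=90.0))  # (87.5, 2)
```

The same mass moves from hard to intermediate when Z rises from 75% to 90%, which is why the choice of Z matters. Whether a* alone separates hard from soft hues depends on the scanner's color map: saturated sRGB blue, for instance, also has a large positive a* (close to that of pure red), so any real implementation would need to be checked against the specific map in use.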
One month after the radiologists performed the SE-CAD classification, the lesions were classified according to the visual pattern, following the classification guidelines proposed in previous studies(11,13). The visual classification also consists of three categories based on the proportion of hard area within the mass: soft (< 50%), intermediate (50-90%), and hard (> 90%). The three radiologists were also blinded to the SE-CAD results, and the final visual classification was determined by consensus.

Diagnostic accuracy, sensitivity, and specificity, together with the area under the receiver operating characteristic (ROC) curve, were calculated for each radiologist. For the SE-CAD and visual classifications, interobserver agreement was assessed by calculating Cohen's kappa statistic, the intraclass correlation coefficient (ICC), and the concordance correlation coefficient (CCC). For all three coefficients, interobserver agreement was classified as slight (0.0-0.2), fair (0.2-0.4), moderate (0.4-0.6), substantial (0.6-0.8), or excellent (0.8-1.0). Values of p < 0.05 were considered statistically significant.

The radiologist-segmented areas were also compared. Areas were considered equal when the variation between two radiologists was less than 20%. Unequal areas were categorized as smaller or larger: an area was categorized as smaller when the ratio of one area to the other was < 0.8 and as larger when that ratio was > 1.2. For statistical analysis, we used MedCalc Statistical Software, version 19.1.3 (MedCalc Software, Ostend, Belgium).

RESULTS

At the beginning of the study, we chose to compare cutoff (Z) values of 70%, 80%, and 90%. The initial results indicated that a Z of 70% had the best diagnostic accuracy (Table 1).
Because of the wide range between Z values of 70% and 80%, we decided to test an intermediate Z of 75%, which improved diagnostic accuracy for the more experienced radiologists (R1 and R3). The best diagnostic accuracy was achieved by R1, followed by R3 and R2, although the differences were not statistically significant (p > 0.05). There was also no statistically significant difference between the SE-CAD and visual classifications for any of the three radiologists. The SE-CAD computation is of low complexity, and the results are produced almost instantaneously (in less than 1 s).

When a cutoff value of Z = 75% was applied, the level of interobserver agreement for the SE-CAD classification, as determined by Cohen's kappa statistic, was excellent between R1 and R2, as well as between R1 and R3, and was substantial between R2 and R3. When the same cutoff value was applied to the visual classification, the level of interobserver agreement was substantial for all of the readers (Table 2). The CCC and ICC results demonstrated excellent agreement between all of the readers (Table 3). When analyzing the radiologist-segmented areas, we found that agreement was best between R1 and R2, at 83.1%, compared with 78.3% between R1 and R3 and 72.3% between R2 and R3 (Table 4).

Figures 2 and 3 present the distribution, by radiologist score, of the lesions histologically classified as benign and malignant, respectively. For comparative purposes, benign lesions were divided into fibroadenoma, fibrocystic changes, and indeterminate lesions, such as papillary lesions and atypical ductal hyperplasia (Figure 2). Malignant lesions were divided into invasive ductal carcinoma, invasive lobular carcinoma, ductal carcinoma in situ, and other malignant neoplasms, such as papillary and mucinous carcinomas (Figure 3).

Figure 2. Distribution of benign lesions for readers 1, 2, and 3, according to the elastography score: 1 indicates a soft lesion; 2 indicates an intermediate lesion; and 3 indicates a hard lesion. The absolute numbers for each of the most common lesions, as determined by histology, are shown within the bars, where blue indicates fibrocystic change, yellow indicates fibroadenoma, and pink indicates an indeterminate lesion, such as a papillary lesion.

Figure 3. Distribution of malignant lesions for readers 1, 2, and 3, according to the elastography score: 1 indicates a soft lesion; 2 indicates an intermediate lesion; and 3 indicates a hard lesion. The absolute numbers for each of the most common lesions, as determined by histology, are shown within the bars, where pink indicates ductal carcinoma in situ, red indicates invasive ductal carcinoma, purple indicates invasive lobular carcinoma, and brown indicates other malignant neoplasms, such as mucinous and papillary carcinomas.

DISCUSSION

In clinical practice, the main limitations of breast ultrasound are poor interobserver agreement and limited reproducibility of the results. With the introduction of digital technology and recent advances in computer science, CAD systems have been studied as an aid in the diagnosis of breast lesions(14,15). Breast ultrasound is considered to have low specificity, which limits its implementation in breast cancer screening programs. The introduction of breast elastography findings into the BI-RADS lexicon increased the specificity of the examination, resulting in better diagnostic performance. However, poor interobserver agreement and limited reproducibility continue to restrict the utility of the technique(16). CAD classification systems for breast masses make it possible to standardize the classification criteria and improve interobserver agreement.
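Two of the agreement statistics named in the Materials and Methods, together with the agreement bands, can be sketched as follows. This is a minimal pure-Python illustration under stated assumptions: Cohen's kappa is unweighted and computed on the 1-3 scores, Lin's CCC uses population (1/n) moments with the scores treated as numbers, and each band's lower bound is inclusive. The ICC is not sketched because the text does not state which ICC form was used. The function names are hypothetical, and the outputs are not guaranteed to match MedCalc, which the authors used.

```python
from collections import Counter


def cohen_kappa(r1: list[int], r2: list[int]) -> float:
    """Unweighted Cohen's kappa: (po - pe) / (1 - pe)."""
    n = len(r1)
    po = sum(a == b for a, b in zip(r1, r2)) / n  # observed agreement
    c1, c2 = Counter(r1), Counter(r2)
    # Chance agreement from each reader's marginal category frequencies.
    pe = sum(c1[k] * c2[k] for k in c1.keys() | c2.keys()) / n ** 2
    return 1.0 if pe == 1 else (po - pe) / (1 - pe)


def lin_ccc(x: list[float], y: list[float]) -> float:
    """Lin's concordance correlation coefficient with population (1/n) moments."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    return 2 * sxy / (vx + vy + (mx - my) ** 2)


def agreement_level(value: float) -> str:
    """Bands used in the study: slight, fair, moderate, substantial, excellent."""
    if value < 0:
        return "below the 0.0-1.0 scale"
    for upper, label in ((0.2, "slight"), (0.4, "fair"), (0.6, "moderate"), (0.8, "substantial")):
        if value < upper:
            return label
    return "excellent"


# Hypothetical scores (1 = soft, 2 = intermediate, 3 = hard) for six masses.
reader_a = [1, 2, 3, 3, 2, 1]
reader_b = [1, 2, 3, 3, 2, 2]
k = cohen_kappa(reader_a, reader_b)
print(round(k, 3), agreement_level(k))        # 0.75 substantial
print(round(lin_ccc(reader_a, reader_b), 3))  # 0.857
```

Kappa corrects raw agreement (5 of 6 masses here) for the agreement expected by chance from each reader's score distribution, whereas the CCC also penalizes a systematic shift between readers, such as one reader scoring consistently harder.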
Recent studies have shown that CAD systems can bring the performance of less experienced readers closer to that of more experienced readers, mainly because such systems do not rely on the subjective criteria typically employed in the visual classification of breast masses(13,16,17). Currently, strain elastography is the most widely available and affordable elastography method in clinical practice. However, criticism regarding its reproducibility and the lack of standardization in the classification of breast masses has discouraged its use(18). A CAD system could overcome that limitation. The performance of a CAD system depends directly on its calibration: the better calibrated it is, the better its results will be.

For the evaluation of breast masses, strain elastography and shear wave elastography have similar sensitivity and specificity. However, shear wave elastography is limited in its ability to evaluate superficial lesions and is less widely available because it is more expensive. For superficial organs, strain elastography is preferred, whereas shear wave elastography is more appropriate for deep organs such as the liver. Some studies have used CAD systems to classify breast masses by elastography, although without employing the BI-RADS lexicon classification and without comparing cutoff values(7,18). In the study conducted by Zhang et al.(7), a cutoff value of 80% hard area was used, but the authors adopted a 5-point classification system(7,19). In the present study, cutoff values of 70%, 80%, and 90% hard area were initially tested in the areas segmented by the radiologists. In the first analysis, the best results were obtained with a cutoff value of 70%. An additional test was then performed with an intermediate cutoff value of 75%, which was found to provide the best diagnostic accuracy.
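The cutoff comparison described above can be sketched as a sweep over candidate values of Z. Because only a score of 3 (hard, > Z% hard area) counts as a positive test, Z acts directly as the decision threshold. The sketch assumes that accuracy is the selection criterion and that ties go to the first candidate listed; it uses invented toy data rather than the study data, the function names are hypothetical, and the ROC analysis the authors also performed is not reproduced.

```python
import math


def diagnostic_metrics(hard_pcts, malignant, z):
    """Sensitivity, specificity, and accuracy when '> Z% hard area' is a positive test."""
    tp = fp = tn = fn = 0
    for pct, is_malignant in zip(hard_pcts, malignant):
        positive = pct > z  # score 3 (hard) is positive; scores 1 and 2 are negative
        if positive and is_malignant:
            tp += 1
        elif positive:
            fp += 1
        elif is_malignant:
            fn += 1
        else:
            tn += 1
    sens = tp / (tp + fn) if tp + fn else math.nan
    spec = tn / (tn + fp) if tn + fp else math.nan
    return sens, spec, (tp + tn) / len(hard_pcts)


def best_cutoff(hard_pcts, malignant, candidates=(70.0, 75.0, 80.0, 90.0)):
    """Candidate Z with the highest accuracy (the first one wins ties)."""
    return max(candidates, key=lambda z: diagnostic_metrics(hard_pcts, malignant, z)[2])


# Invented toy data: hard-area percentage and histology (True = malignant).
hard_pcts = [95, 88, 78, 77, 74, 72, 40, 20]
malignant = [True, True, True, True, False, False, False, False]
for z in (70.0, 75.0, 80.0, 90.0):
    print(z, diagnostic_metrics(hard_pcts, malignant, z))
print("best Z:", best_cutoff(hard_pcts, malignant))  # best Z: 75.0
```

In this toy set, lowering Z trades specificity for sensitivity (Z = 70% calls two benign masses hard), while raising it does the opposite (Z = 80% misses two malignant masses), which mirrors the reasoning behind testing an intermediate value.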
In the present study, manual segmentation of breast masses was used because we believe that classification of breast masses is under the purview of the physician(13). In using the software, radiologists were instructed to segment the most hypoechoic area of the mass, delineating the area immediately inside the margins displayed in B mode. The objective was to minimize contamination of the sample by normal breast tissue. We found good agreement among the readers in terms of the areas segmented. Training radiologists to delineate only areas of lower echogenicity, respecting the margins, could contribute to improving the diagnostic accuracy of CAD systems. It is also noteworthy that the lesions were delineated after the ultrasound examination, during postprocessing, when the radiologist writes the final report; the scan time was therefore not increased. The time spent delineating the lesions was similar (< 10 s) for all three radiologists.

For the most experienced radiologist (R1), diagnostic accuracy was better when the SE-CAD system was used than when the consensus visual classification was used. For R2 and R3, diagnostic accuracy was lower when the consensus visual classification was used, although the differences were not statistically significant. This indicates uniformity in the final classification by the readers. The high levels of agreement among the readers demonstrate the applicability of the method to improve reproducibility and standardize classifications, especially for less experienced readers. The use of CAD software to classify lesions may improve diagnostic accuracy for less experienced radiologists and make the results more homogeneous.
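The area comparison rule from the Materials and Methods can be written in a few lines. This is a minimal sketch; the function names are hypothetical, and it assumes that the ratio is the first reader's area divided by the second reader's and that the per-pair agreement percentage is the share of masses labeled equal.

```python
def compare_areas(area_a: float, area_b: float) -> str:
    """Label reader A's segmented area relative to reader B's by their ratio."""
    ratio = area_a / area_b
    if ratio < 0.8:
        return "smaller"
    if ratio > 1.2:
        return "larger"
    return "equal"


def percent_equal(areas_a: list[float], areas_b: list[float]) -> float:
    """Percentage of masses for which the two readers' areas are labeled 'equal'."""
    labels = [compare_areas(a, b) for a, b in zip(areas_a, areas_b)]
    return 100.0 * labels.count("equal") / len(labels)


# Hypothetical areas (in pixels) segmented by two readers on four masses.
print(percent_equal([100, 70, 110, 130], [100, 100, 100, 100]))  # 50.0
```

As written, the rule is not symmetric in the two readers: a ratio of 0.81 is labeled equal, but swapping the readers gives 1/0.81 ≈ 1.23, which is labeled larger, so the order in which readers are compared has to be fixed in advance.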
When analyzing the distribution of the lesions according to the classification given by each reader, we observed that the second most experienced reader (R3) assigned a score of 3 to fewer malignant lesions than did the other readers, although the difference was not statistically significant. This is due to the complex appearance of malignant lesions on ultrasound images, in which it is often difficult to distinguish between healthy and pathological tissue. As can be seen in Figure 3, some malignant lesions were classified as soft (score of 1). All of the carcinomas classified as soft were high-grade lesions containing areas of necrosis. It should be borne in mind that elastography does not differentiate between benign and malignant lesions but rather between soft and hard tissue. High-grade carcinomas with necrosis are expected to be classified as soft lesions, whereas low-grade lesions accompanied by desmoplasia are expected to be classified as hard. However, all of the false-negative elastography results were for tumors whose morphology had raised the suspicion of malignancy on B-mode ultrasound. In such cases, the morphology of the lesion should be considered in conjunction with the elastography findings in order to make the final classification in accordance with the BI-RADS lexicon. Elastography is a tool that complements ultrasound in the evaluation of breast masses. It should not be used in isolation to differentiate between benign and malignant lesions. However, combining elastography results with those of B-mode ultrasound can improve the performance of ultrasound in the diagnosis of breast masses.

Our study has some limitations. First, all of the images were acquired by the same radiologist. That was due to the study objective, which was not to standardize the technique (which has been widely studied) but to standardize the classification system.
The least experienced radiologist was chosen to acquire the images, in order to demonstrate the ease of obtaining quality images by strain elastography. Another limitation is the relatively small sample size. However, we opted for prospective, consecutive image acquisition, which accurately reproduces the daily clinical routine at our institution. An additional limitation is that the consensus final visual classification might have introduced a bias of the more experienced readers over the less experienced readers.

Our results demonstrate that a cutoff value of 75% for the SE-CAD classification of breast masses provides high diagnostic accuracy and interobserver agreement among radiologists.

REFERENCES

1. Moon WK, Chang SC, Huang CS, et al. Breast tumor classification using fuzzy clustering for breast elastography. Ultrasound Med Biol. 2011;37:700-8.
2. Jalalian A, Mashohor SB, Mahmud HR, et al. Computer-aided detection/diagnosis of breast cancer in mammography and ultrasound: a review. Clin Imaging. 2013;37:420-6.
3. Cho KR, Seo BK, Woo OH, et al. Breast cancer detection in a screening population: comparison of digital mammography, computer-aided detection applied to digital mammography and breast ultrasound. J Breast Cancer. 2016;19:316-23.
4. Chen CM, Chou YH, Han KC, et al. Breast lesions on sonograms: computer-aided diagnosis with nearly setting-independent features and artificial neural networks. Radiology. 2003;226:504-14.
5. Hooley RJ, Scoutt LM, Philpotts LE. Breast ultrasonography: state of the art. Radiology. 2013;268:642-59.
6. Itoh A, Ueno E, Tohno E, et al. Breast disease: clinical application of US elastography for diagnosis. Radiology. 2006;239:341-50.
7. Zhang X, Xiao Y, Zeng J, et al. Computer-assisted assessment of ultrasound real-time elastography: initial experience in 145 breast lesions. Eur J Radiol. 2014;83:e1-7.
8. Marcomini KD, Fleury EFC, Oliveira VM, et al. Agreement between a computer-assisted tool and radiologist to classify lesions in breast elastography images. Proc SPIE 10134, Medical Imaging 2017: Computer-Aided Diagnosis, 101342T.
9. American College of Radiology. ACR BI-RADS® Atlas - Breast Imaging Reporting and Data System. 5th ed. Reston, VA: American College of Radiology; 2013.
10. Fleury EF, Fleury JC, Piato S, et al. New elastographic classification of breast lesions during and after compression. Diagn Interv Radiol. 2009;15:96-103.
11. Fleury EF. The importance of breast elastography added to the BI-RADS® (5th edition) lexicon classification. Rev Assoc Med Bras. 2015;6:313-6.
12. Marcomini KD, Fleury EFC, Schiabel H, et al. Proposal of semi-automatic classification of breast lesions for strain sonoelastography using a dedicated CAD system. In: Tingberg A, Lang K, Timberg P, editors. Breast imaging. IWDM 2016. Lecture Notes in Computer Science, vol 9699. Springer, Cham.
13. Fleury EFC, Gianini AC, Marcomini K, et al. The feasibility of classifying breast masses using a computer-aided diagnosis (CAD) system based on ultrasound elastography and BI-RADS lexicon. Technol Cancer Res Treat. 2018;17:1533033818763461.
14. Huang YS, Takada E, Konno S, et al. Computer-aided diagnosis in 3-D breast elastography. Comput Methods Programs Biomed. 2018;153:201-9.
15. Moon WK, Huang YS, Lee YW, et al. Computer-aided tumor diagnosis using shear wave breast elastography. Ultrasonics. 2017;78:125-33.
16. Cho E, Kim EK, Song MK, et al. Application of computer-aided diagnosis on breast ultrasonography: evaluation of diagnostic performances and agreement of radiologists according to different levels of experience. J Ultrasound Med. 2018;37:209-16.
17. Wu WJ, Moon WK. Ultrasound breast tumor image computer-aided diagnosis with texture and morphological features. Acad Radiol. 2008;15:873-80.
18. Barr RG, Zhang Z. Shear-wave elastography of the breast: value of a quality measure and comparison with strain elastography. Radiology. 2015;275:45-53.
19. Duma MM, Chiorean AR, Chiorean M, et al. Breast diagnosis: concordance analysis between the BI-RADS classification and Tsukuba sonoelastography score. Clujul Med. 2014;87:250-7.

1. Faculdade de Ciências Médicas da Santa Casa de São Paulo, São Paulo, SP, Brazil
2. IBCC - Instituto Brasileiro de Controle do Câncer, São Paulo, SP, Brazil
a. https://orcid.org/0000-0002-5334-7134
b. https://orcid.org/0000-0002-8594-4654

Correspondence: Dr. Eduardo F. C. Fleury, Rua Maestro Chiaffarelli, 409, Jardim Paulista, São Paulo, SP, Brazil, 01432-030
Email: edufleury@hotmail.com

Received March 09, 2019. Accepted after revision July 12, 2019. Publication date: 02/01/2020


Av. Paulista, 37 - 7° andar - Conj. 71 - CEP 01311-902 - São Paulo - SP - Brazil - Phone: (11) 3372-4544 - Fax: (11) 3372-4554